QUANTUM COMPUTING

INTRODUCTION TO QUANTUM COMPUTING

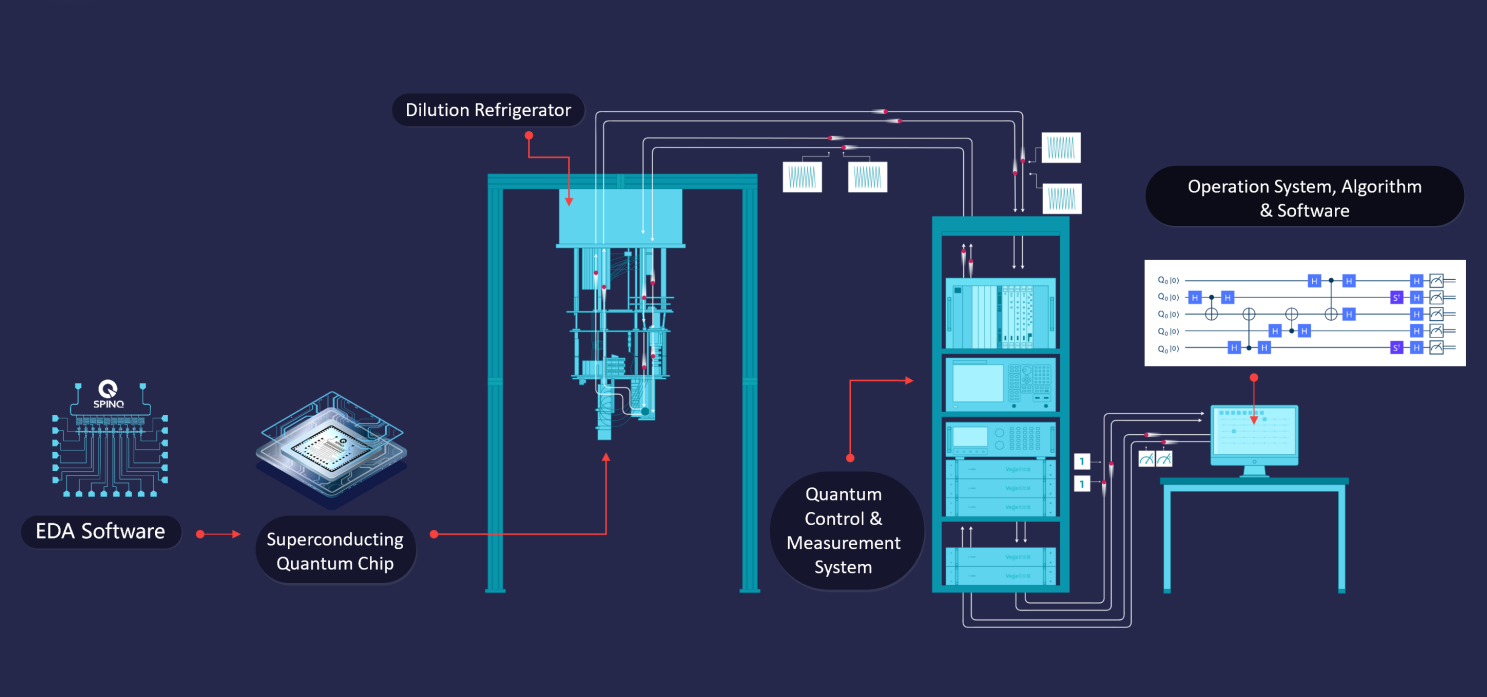

Quantum computing is a modern way of computing based on the science of quantum mechanics and its counterintuitive phenomena. It is a beautiful combination of physics, mathematics, computer science and information theory. It controls the behavior of microscopic physical objects such as atoms, electrons and photons. This promises high computational power, lower energy consumption and, for certain problems, exponential speedups over classical computers. Here, we present an introduction to the fundamental concepts and some ideas of quantum computing. This paper starts with the origin of traditional computing and discusses the improvements and transformations made so far to overcome its limitations. It then moves on to the basic working of quantum computing and the quantum properties it relies on, such as superposition, entanglement and interference. To understand the full potential and challenges of building a practical, commercially viable quantum computer, the paper covers the architecture, hardware, software, design, types and algorithms specifically required by quantum computers.

THE MECHANICS OF QUANTUM COMPUTING

Quantum computing is a unique technology because it isn’t built on bits that are binary in nature, meaning that they’re either zero or one. Instead, the technology is based on qubits. These are two-state quantum mechanical systems that can exist in a superposition of zero and one. In effect, they are part zero and part one at the same time until they are measured.
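To make “part zero and part one” concrete, here is a minimal sketch in plain Python, with no quantum SDK assumed. It stores a qubit as two complex amplitudes and applies the Born rule: a measurement returns 0 or 1 with probabilities equal to the squared magnitudes of those amplitudes. The helper names `make_qubit`, `probabilities` and `measure` are illustrative and do not come from any library.

```python
import math
import random

# A qubit state alpha|0> + beta|1> is stored as two complex amplitudes.
# Valid states are normalized: |alpha|^2 + |beta|^2 == 1.
def make_qubit(alpha: complex, beta: complex) -> tuple[complex, complex]:
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("a qubit state cannot be the zero vector")
    return (alpha / norm, beta / norm)

def probabilities(qubit: tuple[complex, complex]) -> tuple[float, float]:
    # Born rule: outcome 0 has probability |alpha|^2, outcome 1 has |beta|^2.
    alpha, beta = qubit
    return (abs(alpha) ** 2, abs(beta) ** 2)

def measure(qubit: tuple[complex, complex], rng: random.Random) -> int:
    # A measurement yields a single classical bit.
    p0, _ = probabilities(qubit)
    return 0 if rng.random() < p0 else 1

# Equal superposition: "part zero and part one" until measured.
plus = make_qubit(1, 1)
print(probabilities(plus))  # approximately (0.5, 0.5)

rng = random.Random(42)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(plus, rng)] += 1
print(counts)  # roughly 5000 zeros and 5000 ones
```

Repeated simulated measurements of the equal superposition give roughly half zeros and half ones. Each individual measurement still produces only one classical bit.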

Faster and more efficient

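The quantum property of “superposition,” when combined with “entanglement,” allows N qubits to act as a group rather than exist in isolation. The joint state of N qubits is described by 2^N amplitudes, one for each possible N-bit string. In contrast, N classical bits hold just one of those values at a time. This exponentially larger state space (2^N versus N) is the source of the higher information density attributed to quantum computers, although measuring N qubits still yields only N classical bits.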
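The growth from N to 2^N can be seen in a small state-vector simulation. The sketch below is a hypothetical pure-Python simulator that assumes qubit 0 is the most significant bit of the basis-state index. It prepares the entangled Bell state with a Hadamard gate followed by a CNOT, then prints how many amplitudes registers of different sizes require.

```python
import math

# An N-qubit register is described by 2**N complex amplitudes,
# indexed by the basis states |00...0> through |11...1>.
def zero_state(n: int) -> list[complex]:
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state

def apply_hadamard(state: list[complex], target: int, n: int) -> list[complex]:
    # Hadamard on one qubit; qubit 0 is the most significant bit of the index.
    s = 1 / math.sqrt(2)
    bit = 1 << (n - 1 - target)
    out = state[:]
    for i in range(len(state)):
        if not i & bit:
            a, b = state[i], state[i | bit]
            out[i] = s * (a + b)
            out[i | bit] = s * (a - b)
    return out

def apply_cnot(state: list[complex], control: int, target: int, n: int) -> list[complex]:
    # Flip the target bit of every basis state whose control bit is 1.
    cbit = 1 << (n - 1 - control)
    tbit = 1 << (n - 1 - target)
    out = state[:]
    for i in range(len(state)):
        if i & cbit:
            out[i ^ tbit] = state[i]
    return out

# Bell state (|00> + |11>)/sqrt(2): H on qubit 0, then CNOT from qubit 0 to qubit 1.
n = 2
bell = apply_cnot(apply_hadamard(zero_state(n), 0, n), 0, 1, n)
print([round(abs(a) ** 2, 3) for a in bell])  # [0.5, 0.0, 0.0, 0.5]

# The number of amplitudes doubles with each added qubit.
for k in (1, 2, 10, 20):
    print(k, "qubits ->", len(zero_state(k)), "amplitudes")
```

In the Bell state the two qubits are perfectly correlated. Measuring them gives 00 or 11, each with probability 1/2, and never 01 or 10.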

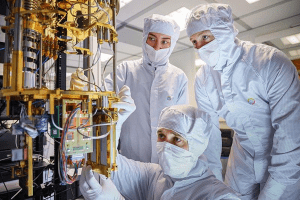

Although quantum computers promise a significant performance advantage, system fidelity remains a weak point. Qubits are highly susceptible to disturbances in their environment, which makes them prone to error. Correcting these errors requires redundant qubits and extensive error-correcting codes. However, work on useful applications of so-called Noisy Intermediate-Scale Quantum (NISQ) devices is proceeding rapidly. Improving the fidelity of qubit operations is key to increasing the number of gates that can be executed and the usefulness of quantum algorithms. It is also key to implementing error correction schemes with reasonable qubit overhead.
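Correcting errors with redundant qubits can be illustrated with the simplest case, the three-qubit bit-flip (repetition) code. The sketch below is a classical probability model, not a quantum simulation. It assumes independent bit-flip errors with probability p and majority-vote decoding. For these assumptions, the logical error rate is 3p^2 - 2p^3, which is lower than p whenever p < 1/2, at the cost of three physical qubits per logical qubit. The function name `logical_error_rate` is illustrative.

```python
from itertools import product

# Toy model of the 3-qubit bit-flip (repetition) code: one logical bit is
# stored in three physical bits, each flipped independently with probability p.
# Majority voting recovers the logical bit unless two or more bits flip.
# Assumption: only bit-flip errors occur (real qubits also suffer phase errors).
def logical_error_rate(p: float, copies: int = 3) -> float:
    total = 0.0
    for flips in product((0, 1), repeat=copies):
        k = sum(flips)
        prob = (p ** k) * ((1 - p) ** (copies - k))
        if k > copies // 2:  # majority vote decodes the wrong value
            total += prob
    return total

for p in (0.1, 0.01, 0.001):
    print(f"physical {p:<6} -> logical {logical_error_rate(p):.2e}")
```

The gain grows as the physical error rate falls. This is why improving qubit fidelity and reducing error-correction overhead go hand in hand.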

The evolution of quantum computing

Although the term quantum computing has only recently become a front-and-center topic in the public eye, the field of quantum information science has been around for several decades. Paul Benioff described a quantum mechanical model of a computer in 1980. In his paper, titled “The computer as a physical system: A microscopic quantum mechanical Hamiltonian model of computers as represented by Turing machines,” Benioff showed that computers could operate under the laws of quantum mechanics. Shortly thereafter, Richard Feynman (1982) wrote a paper titled “Simulating Physics with Computers.” In it, he looked at a range of systems that you might want to simulate and argued that they cannot be simulated efficiently by a classical computer, but could be by a quantum machine.

In short, quantum computing may deliver better-quality results faster for certain problems. One practical application we could see in the future is AI and machine learning (ML). The ability to work with many candidate solutions in superposition, rather than strictly one after another, may have significant potential for AI and ML.

The future of quantum computing

Because the field is so broad, the technology has many potential applications. A few opportunity areas include artificial intelligence, chemical simulation, molecular modeling, cryptography, optimization, and financial modeling. Two of these, artificial intelligence and cryptography, are discussed in more detail below.

Around 80% of experts expect quantum advantage over high-performance conventional computing to be achieved within a decade for certain applications. This is in line with forecasts from major industry players. It also reflects progress on new methods that make hybrid quantum/conventional solutions easier to access.
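Hybrid quantum/conventional solutions typically split the work. A quantum processor evaluates a parameterized circuit, and a classical optimizer updates the parameters, as in variational algorithms such as VQE. The toy loop below assumes a single-qubit circuit, Ry(theta)|0>, whose expectation value <Z> is computed exactly in Python rather than measured on hardware. It uses the parameter-shift rule to obtain gradients. The function names, starting angle and learning rate are illustrative choices.

```python
import math

# "Quantum" part (simulated here, an assumption): prepare Ry(theta)|0> and
# return the expectation value <Z>.
def expectation_z(theta: float) -> float:
    # Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>
    # <Z> = cos^2(theta/2) - sin^2(theta/2), which equals cos(theta)
    return math.cos(theta / 2) ** 2 - math.sin(theta / 2) ** 2

def parameter_shift_gradient(theta: float) -> float:
    # Parameter-shift rule: the exact gradient comes from two extra circuit
    # evaluations at theta +/- pi/2, with no finite-difference step size.
    return 0.5 * (expectation_z(theta + math.pi / 2) - expectation_z(theta - math.pi / 2))

# Classical part: plain gradient descent on the circuit parameter.
theta = 0.1
learning_rate = 0.4
for step in range(100):
    theta -= learning_rate * parameter_shift_gradient(theta)

print(round(theta, 4), round(expectation_z(theta), 4))  # converges near pi and -1
```

On real hardware, `expectation_z` would be estimated from repeated measurements. The classical optimizer loop would stay the same.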

Artificial intelligence

The combination of AI and quantum technology is often referred to as quantum artificial intelligence (QAI). Innovations in this space could advance the algorithms used in computer vision and natural language processing (NLP).

Although building computers powerful enough for these tasks is a formidable challenge, there has been significant innovation in the space, such as when IBM set a world record for quantum simulation.

Cryptography

The impact of quantum computing on cryptography has been established for more than two decades. It began with Peter Shor’s 1994 work showing that a sufficiently large quantum computer could efficiently factor integers and compute discrete logarithms. This threatens common public-key cryptographic standards such as RSA.
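The core of Shor’s threat is a reduction from factoring to order finding. Let r be the order of a modulo N, the smallest r > 0 with a^r = 1 (mod N). If r is even and a^(r/2) is not congruent to -1 mod N, then gcd(a^(r/2) - 1, N) is a nontrivial factor of N. The sketch below shows only this classical reduction. The order-finding step, which is what a quantum computer performs efficiently, is replaced here by brute force, so it is exponentially slow and only usable on tiny numbers. `find_order` and `shor_classical` are hypothetical names.

```python
import math
import random

# Brute-force stand-in for the quantum order-finding subroutine:
# the smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
def find_order(a: int, n: int) -> int:
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def shor_classical(n: int, rng: random.Random) -> tuple[int, int]:
    # Assumes n is an odd composite that is not a prime power.
    while True:
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g  # lucky guess: a already shares a factor with n
        r = find_order(a, n)
        if r % 2 == 1:
            continue  # need an even order; try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue  # a**(r/2) == -1 mod n gives only trivial factors
        p = math.gcd(y - 1, n)  # y*y == 1 and y != +/-1 mod n, so p is nontrivial
        return p, n // p

print(shor_classical(15, random.Random(0)))
```

For N = 15 and a = 7, the order is 4 and 7^2 mod 15 = 4. This gives gcd(3, 15) = 3 and the factorization 3 × 5.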

Post-quantum cryptography, also known as quantum-resistant cryptography, is an emerging field that is expected to grow rapidly in the coming years. The National Institute of Standards and Technology (NIST) and the National Security Agency (NSA) have launched extensive initiatives to spur research and development, and eventually new standards, in the space.
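Post-quantum schemes rely on mathematical problems believed to be hard even for quantum computers. One major family is lattice-based cryptography, which includes NIST’s ML-KEM standard. The toy below is a Regev-style learning-with-errors (LWE) encryption of single bits. It uses deliberately tiny, insecure parameters chosen only so the arithmetic is easy to follow. It is a sketch of the underlying idea, not ML-KEM or any production scheme, and all names and parameter values are assumptions.

```python
import random

# Toy learning-with-errors (LWE) bit encryption in the style of Regev's scheme.
# Parameters are tiny and INSECURE; they only illustrate the idea.
N_DIM, M_SAMPLES, Q = 16, 32, 3329

def keygen(rng: random.Random):
    s = [rng.randrange(Q) for _ in range(N_DIM)]                 # secret key
    a_rows = [[rng.randrange(Q) for _ in range(N_DIM)] for _ in range(M_SAMPLES)]
    errors = [rng.choice((-1, 0, 1)) for _ in range(M_SAMPLES)]  # small noise
    b = [(sum(ai * si for ai, si in zip(row, s)) + e) % Q
         for row, e in zip(a_rows, errors)]
    return s, (a_rows, b)                                        # (secret, public)

def encrypt(public, bit: int, rng: random.Random):
    a_rows, b = public
    subset = [i for i in range(M_SAMPLES) if rng.random() < 0.5]  # random subset of samples
    u = [sum(a_rows[i][j] for i in subset) % Q for j in range(N_DIM)]
    v = (sum(b[i] for i in subset) + bit * (Q // 2)) % Q
    return u, v

def decrypt(secret, ciphertext) -> int:
    u, v = ciphertext
    # d = (sum of small errors) + bit * (Q // 2), all mod Q
    d = (v - sum(ui * si for ui, si in zip(u, secret))) % Q
    # Round to whichever of 0 or Q/2 is closer.
    return 1 if Q // 4 < d < 3 * Q // 4 else 0

rng = random.Random(1)
secret, public = keygen(rng)
for bit in (0, 1, 1, 0):
    assert decrypt(secret, encrypt(public, bit, rng)) == bit
print("all bits decrypted correctly")
```

Decryption always succeeds here for a simple reason. The accumulated noise is at most 32 in absolute value, well below Q/4, so it never pushes the result across the decision boundary. The security of real schemes rests on the hardness of recovering the secret from noisy samples at much larger parameters.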

Laying the groundwork for future innovations in quantum computing

Quantum computing is likely to keep increasing in complexity over time. Businesses and academic institutions alike should understand that this complexity makes it difficult for any single company or academic institution to develop innovations on its own.

In 2018, NIST joined forces with SRI International to form the Quantum Economic Development Consortium (QED-C) to support the development of the quantum industry. Led by Joe Broz (Vice President of SRI) as its Executive Director, the QED-C is a consortium of stakeholders working to create a robust commercial quantum industry and its associated supply chain.

Features of Quantum Computing

Superposition and entanglement are two features of quantum physics on which quantum computing is based. For certain problems, they allow quantum computers to perform operations exponentially faster than conventional computers, potentially with much lower energy consumption.
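Superposition alone does not make a computation faster. Quantum algorithms also rely on interference, the third property named in the introduction, so that the amplitudes of wrong answers cancel. The minimal sketch below assumes plain Python lists for one-qubit states with real amplitudes only. Applying the Hadamard gate once creates an equal superposition. Applying it again makes the two contributions to |1> cancel, returning the qubit to |0> with certainty.

```python
import math

# One-qubit states as [amplitude of |0>, amplitude of |1>] (real amplitudes only).
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]  # Hadamard gate

def apply(gate, state):
    # 2x2 matrix times 2-element vector.
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

zero = [1.0, 0.0]
superposed = apply(H, zero)    # (|0> + |1>)/sqrt(2): 50/50 if measured now
back = apply(H, superposed)    # the two paths to |1> cancel; the paths to |0> add up

print([round(a * a, 3) for a in superposed])  # [0.5, 0.5]
print([round(a * a, 3) for a in back])        # [1.0, 0.0]
```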

ADVANTAGES OF QUANTUM COMPUTING

- The main advantage of quantum computing is that it can also perform classical algorithm calculations, carrying them out much as a classical computer would.

- Adding qubits to a register increases the size of its state space exponentially: each additional qubit doubles the number of basis states the register can hold in superposition.

- A qubit can exist in a superposition of states, so for certain problems a quantum computer offers an exponential speedup in the number of calculations required.
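The claim that a quantum computer can run classical algorithms rests on reversible logic. Any classical circuit can be rebuilt from reversible gates such as the Toffoli gate, which quantum hardware can implement. The sketch below models the Toffoli gate on classical basis states only. On a quantum computer, the gate acts linearly on superpositions of these states. The function name `toffoli` is illustrative.

```python
from itertools import product

# The Toffoli (controlled-controlled-NOT) gate maps |a, b, c> to
# |a, b, c XOR (a AND b)>. With c = 0 it computes AND without erasing its inputs.
def toffoli(a: int, b: int, c: int) -> tuple[int, int, int]:
    return a, b, c ^ (a & b)

for a, b in product((0, 1), repeat=2):
    print(a, b, "->", toffoli(a, b, 0)[2])  # third bit equals a AND b

# Reversible: applying the gate twice restores every input.
assert all(toffoli(*toffoli(*bits)) == bits for bits in product((0, 1), repeat=3))
```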

Disadvantages of Quantum computing:

- Research into these problems is still ongoing, and efforts to identify a solution have so far shown little positive progress.

- Qubits are not digital bits, and an unknown qubit state cannot be copied (the no-cloning theorem), so conventional error correction cannot be applied directly.

- The main disadvantage of quantum computing is that the technology required to implement a large-scale, fault-tolerant quantum computer is not yet available.

- The minimum energy requirement for quantum logical operations is five times that of classical logical operations.

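Qubits cannot use conventional, copy-based error correction because of the no-cloning theorem: an unknown quantum state cannot be duplicated. The sketch below tries a CNOT gate as a would-be copier, storing two-qubit states as four real amplitudes. The copier works for the basis states |0> and |1>, but it turns a superposition into an entangled state rather than two independent copies. The theorem itself is more general and rules out every such copying procedure. The helper names are illustrative.

```python
import math

# Two-qubit states as 4 amplitudes for |00>, |01>, |10>, |11> (first qubit = left bit).
def cnot(state):
    # Control = first qubit, target = second qubit: swaps the |10> and |11> amplitudes.
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def kron(q1, q2):
    # Tensor product of two one-qubit states.
    return [q1[0] * q2[0], q1[0] * q2[1], q1[1] * q2[0], q1[1] * q2[1]]

s = 1 / math.sqrt(2)
zero, one, plus = [1.0, 0.0], [0.0, 1.0], [s, s]

# Copying a classical value works: |1>|0> -> |1>|1>.
print(cnot(kron(one, zero)) == kron(one, one))  # True

# "Copying" a superposition does not: the result is the entangled
# state (|00> + |11>)/sqrt(2), not the product state |+>|+>.
attempt = cnot(kron(plus, zero))
target = kron(plus, plus)
print([round(x, 3) for x in attempt])  # [0.707, 0.0, 0.0, 0.707]
print([round(x, 3) for x in target])   # [0.5, 0.5, 0.5, 0.5]
```

Quantum error-correcting codes avoid copying. They spread the information of one qubit across several entangled qubits.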
